Data Exchange Integration

The Assetic REST API allows repetitive bulk data imports to be automated via Data Exchange.

The basic steps are:

1. Upload the source document via the API endpoint POST /api/v2/document

2. Execute the Data Exchange job using one of the following:

- The API endpoint POST /api/v2/DataExchangeJob to execute Data Exchange for the uploaded document against a saved Data Exchange profile

- The API endpoint POST /api/v2/DataExchangeJobNoProfile to execute Data Exchange for the uploaded document by specifying the module and category and utilising field name automapping

3. Verify success/identify data issues via the API endpoint GET /api/v2/DataExchangeTask/{id}

4. Optionally retrieve the results or error file via the API endpoint GET /api/v2/document/{id}/file, using the document GUID contained in either the 'OutputDocumentId' or 'ErrorDocumentId' property of the task response.

1. Upload Document

Data Exchange requires the uploaded document to be in CSV format. The document is uploaded to Assetic via the API endpoint POST /api/v2/document.

After the upload, the API response contains the GUID of the uploaded document in Assetic. This GUID is used in the next step.

Refer to the article Uploading Files for how to upload the file. Note that the parameter 'ParentType' can be set to "DataExchange" to assist with filtering.

2. Execute Data Exchange Job

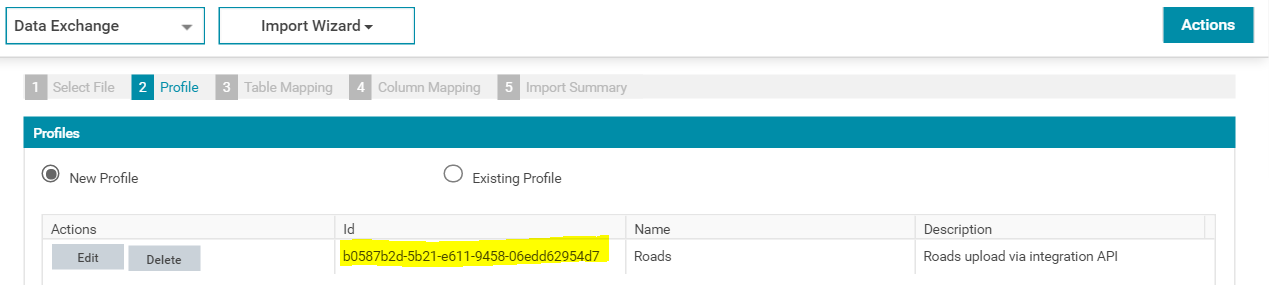

The following is a sample payload for the Data Exchange job API endpoint POST /api/v2/DataExchangeJob:

{ "ProfileId": "115ee90b-7ede-4e00-8ad2-0001f6646bab", "DocumentId": "cd6544b3-3031-e711-80bc-005056947279" }
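A minimal sketch of submitting this payload from Python, using only the standard library, is shown below. The site URL, the credentials, and the use of HTTP Basic authentication with a username and API token are assumptions made for illustration, so confirm the authentication scheme for your environment. The hypothetical helper `build_job_request` only builds the request; sending it is left to `urllib.request.urlopen`.

```python
import base64
import json
import urllib.request


def build_job_request(base_url, username, api_token, profile_id, document_id):
    """Build (but do not send) a POST request for /api/v2/DataExchangeJob."""
    payload = {"ProfileId": profile_id, "DocumentId": document_id}
    # Assumption: HTTP Basic authentication with a username and API token.
    credentials = base64.b64encode(f"{username}:{api_token}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v2/DataExchangeJob",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical site URL and credentials; the GUIDs are the sample values above.
    request = build_job_request(
        "https://example.assetic.net",
        "user@example.com",
        "my-api-token",
        profile_id="115ee90b-7ede-4e00-8ad2-0001f6646bab",
        document_id="cd6544b3-3031-e711-80bc-005056947279",
    )
    print(request.get_method(), request.full_url)
    # To send the request: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes the payload easy to inspect or log before a bulk import is started.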

Job Payload - No Profile

If the API endpoint POST /api/v2/DataExchangeJobNoProfile is used, it requires the document ID generated in Step 1. The column header names in the source file must also match those defined by the appropriate Template File from the Data Exchange Import Wizard, so that the field automap function will work. Information about the Data Exchange Template Files can be found here.
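Because automapping depends on the header names, it can help to check the source file before uploading it. The sketch below lists CSV headers that do not appear in a template's column list. The helper name and the example column names are hypothetical, and the exact, case-sensitive comparison is an assumption about how automapping matches names.

```python
import csv
import io


def unmapped_columns(csv_text, template_columns):
    """Return CSV header names that are not present in the template columns."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])  # an empty file yields an empty header
    allowed = set(template_columns)
    # Assumption: names must match the template exactly (case-sensitive).
    return [name.strip() for name in header if name.strip() not in allowed]


if __name__ == "__main__":
    # Hypothetical template columns and file content, for illustration only.
    template = ["Asset Id", "Asset Name"]
    source = "Asset Id,Asset Name,Colour\nA1,Pump,Red\n"
    print(unmapped_columns(source, template))
```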

The values for 'Module' and 'Category' are the internal system identifier values, instead of the display values. For example use:

"Module": "NetworkEntity"

instead of

"Module": "Simple Asset Groups"

A list of mappings from display values to identifier values has been compiled and is available here to aid in constructing the payload.

The following is a sample payload for the Data Exchange job API endpoint POST /api/v2/DataExchangeJobNoProfile:

{ "DocumentId": "cd6544b3-3031-e711-80bc-005056947279", "Module": "Assets", "Category": "Land" }
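The payload can also be assembled in code, which gives a convenient place to swap a display value for its internal identifier. The sketch below is illustrative only: the helper name is hypothetical, and the lookup table holds just the one pair documented above, so it would need extending from the published mapping list.

```python
import json

# Only the pair documented in this article; extend from the published mapping list.
MODULE_IDENTIFIERS = {"Simple Asset Groups": "NetworkEntity"}


def build_no_profile_payload(document_id, module, category):
    """Return the JSON body for POST /api/v2/DataExchangeJobNoProfile."""
    # Replace a known display value with its identifier; pass other values through.
    module_identifier = MODULE_IDENTIFIERS.get(module, module)
    return json.dumps(
        {
            "DocumentId": document_id,
            "Module": module_identifier,
            "Category": category,
        }
    )


if __name__ == "__main__":
    # Reproduces the sample payload above.
    print(
        build_no_profile_payload(
            "cd6544b3-3031-e711-80bc-005056947279", "Assets", "Land"
        )
    )
```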

3. Track Data Exchange Job

The Assetic platform shows Data Exchange History which allows the user to view the status of a Data Exchange task. Any Data Exchange tasks initiated via the API are also visible on this page.

In addition to viewing the tasks in the UI, the API endpoint GET /api/v2/DataExchangeTask/{id} allows the integrator to retrieve a task's details to assess the outcome of the job and determine if the data was successfully imported.

Response

An example response from the GET /api/v2/DataExchangeTask/{id} endpoint can be seen below:

    {
        "Id": "21403f71-5533-4437-8aac-38ba3e311fe4",
        "JobName": "Assets CATemplateSWLowPressurePipe",
        "Type": 1,
        "Stage": 7,
        "Status": 3,
        "StatusDescription": "Completed",
        "SysBackgroundWorkerId": "663474e3-6c20-4634-888c-5874fb92c172",
        "RequestUser": "Roger Federer",
        "StartTime": "2023-01-18T11:12:37",
        "EndTime": "2023-01-18T11:12:39",
        "Summary": "2 New Complex Assets Added\n0 Complex Assets Updated\n0 Failed to import\n",
        "DataExchangeProfile": "efbb4eb9-3725-457f-8478-9a1976557776",
        "Module": 0,
        "TargetType": 41,
        "SourceType": null,
        "SourceInfo": "<DocumentId>8f21ec9c-12e9-4e1b-a875-b67f3134d17d</DocumentId>",
        "TargetName": "CATemplateSWLowPressurePipe",
        "ErrorDocumentId": null,
        "OutputDocumentId": "12111741-56f5-4f15-98db-7d957cb04c68",
        "Message": null,
        "_links": [],
        "_embedded": null
    }

Some key pieces of information returned in the above response from this endpoint are described below:

- Status:

As a system background worker manages the execution of all Data Exchange jobs, a job requested via the API may be in any of several progress statuses when queried. The 'StatusDescription' property of the response indicates the job's current status, for example "Pending", "In Progress", "Completed", or "Complete with error(s)".
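Since a job may still be queued or running when first queried, an integration will typically poll the task until it finishes. The sketch below treats "Completed" and "Complete with error(s)" as finished. The statuses in this article are given only as examples, so other terminal values may exist, which is why the loop is capped. The helper name and the injected `fetch_task` callable are hypothetical; `fetch_task` stands in for whatever code calls GET /api/v2/DataExchangeTask/{id} and returns the parsed JSON.

```python
import time

# Status descriptions quoted in this article that indicate a finished job.
FINISHED_STATUSES = {"Completed", "Complete with error(s)"}


def wait_for_task(fetch_task, task_id, interval_seconds=5.0, max_attempts=60):
    """Poll until the task reports a finished status, then return its details.

    fetch_task is any callable that takes a task ID and returns the parsed
    JSON from GET /api/v2/DataExchangeTask/{id} as a dict.
    """
    for attempt in range(max_attempts):
        task = fetch_task(task_id)
        if task.get("StatusDescription") in FINISHED_STATUSES:
            return task
        # Sleep between checks, but not after the final attempt.
        if attempt < max_attempts - 1:
            time.sleep(interval_seconds)
    raise TimeoutError(f"Task {task_id} did not finish after {max_attempts} checks")


if __name__ == "__main__":
    # Simulated responses in place of real API calls.
    simulated = iter(
        [
            {"StatusDescription": "Pending"},
            {"StatusDescription": "In Progress"},
            {"StatusDescription": "Completed"},
        ]
    )
    final = wait_for_task(lambda _task_id: next(simulated), "example-task", 0)
    print(final["StatusDescription"])
```

Passing the fetch function in as an argument keeps the polling logic independent of any particular HTTP client.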

These statuses can be used to determine if a job has finished, or is still being processed by the background worker task.

- Result File and Error File:

On completion of a Data Exchange job, the system will generate a Result file that is linked to the task within the 'OutputDocumentId' property.

Note: For Data Exchange imports that create entities in Brightly Assetic where the Auto-Generated ID configuration is enabled, the Result File will also contain an additional column containing the system-generated identifier. This applies to imports for Assets, Components, Functional Locations, Simple Assets, and Simple Asset Groups.

If an error occurs during the import, an error message is generated and added to a new "Error" column, which is appended to the end of the original CSV import file. Depending on the type of Data Exchange import, a GUID reference to the file containing the error messages can be found within the "OutputDocumentId" or "ErrorDocumentId" properties of the response.

If a GUID reference for a file is contained within the "OutputDocumentId" property, this file reference will contain all rows of the original file (including successfully processed rows) but in instances where a row failed to be processed an error will be included in the appended "Error" column. However, if a file GUID is contained within the "ErrorDocumentId" property, only rows that were unsuccessfully processed are included in this file.
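The sketch below shows one way to act on these two properties: it picks whichever document GUID is present, builds the path for GET /api/v2/document/{id}/file, and filters downloaded CSV text down to the rows with a non-empty "Error" column. Both helper names are hypothetical, and the code assumes the download returns the CSV content as text. Preferring the error file over the result file is a design choice for this example, not documented behaviour.

```python
import csv
import io


def document_path(task):
    """Return the download path for the task's error or result file, if any."""
    # Prefer the error file when present, otherwise fall back to the result file.
    document_id = task.get("ErrorDocumentId") or task.get("OutputDocumentId")
    if not document_id:
        return None
    return f"/api/v2/document/{document_id}/file"


def failed_rows(csv_text):
    """Return the rows whose appended "Error" column is non-empty."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if (row.get("Error") or "").strip()]


if __name__ == "__main__":
    task = {
        "ErrorDocumentId": None,
        "OutputDocumentId": "12111741-56f5-4f15-98db-7d957cb04c68",
    }
    print(document_path(task))

    # Hypothetical file content, for illustration only.
    sample = "Asset Id,Error\nA1,\nA2,Invalid date format\n"
    for row in failed_rows(sample):
        print(row["Asset Id"], row["Error"])
```

The same filter works for either file type, since a file referenced by "ErrorDocumentId" contains only failed rows.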

The data of the results file or error file can be downloaded using the endpoint GET /api/v2/document/{id}/file by providing the GUID in place of {id} in the request endpoint.

- Summary:

The "Summary" property of the response reports a general overview of the processing of a task. This property can include details such as how many rows of a file were successfully or unsuccessfully processed. It can also contain other helpful information such as errors that may have prevented a file from being processed, such as due to a missing required field or an invalid data format. In situations like this where an error is due to pre-validation, no error file may have been created and linked to the Data Exchange task and the information found here may be useful in understanding the issue with the import.
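When the summary follows the shape of the sample response above (one "count description" entry per line), it can be split into structured values for logging or alerting. The helper name is hypothetical and the line format is inferred from that single sample, so lines that do not start with a number, such as validation messages, are skipped rather than parsed.

```python
import re


def parse_summary(summary):
    """Split a Summary string into (count, description) pairs."""
    results = []
    for line in summary.splitlines():
        # Match a leading integer followed by descriptive text.
        match = re.match(r"\s*(\d+)\s+(.*\S)", line)
        if match:
            results.append((int(match.group(1)), match.group(2)))
    return results


if __name__ == "__main__":
    sample = "2 New Complex Assets Added\n0 Complex Assets Updated\n0 Failed to import\n"
    for count, description in parse_summary(sample):
        print(count, description)
```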

Sample Script

A sample Python script demonstrating the automation of bulk data uploads is provided in this article on the Integration SDK page.